Optable Blog

Learn about the modern advertising landscape and how Optable's solutions can help your business.

The shift toward first-party data, privacy-by-design systems, and interoperable identity frameworks has reshaped what publishers need from their data infrastructure. Most publishers now face a familiar challenge: they capture audience interactions across web and app environments, but the underlying signals are shallow, inconsistent, or difficult to activate in meaningful ways. Traditional DMPs and the remnants of cookie-based targeting aren’t enough to support modern audience strategy.

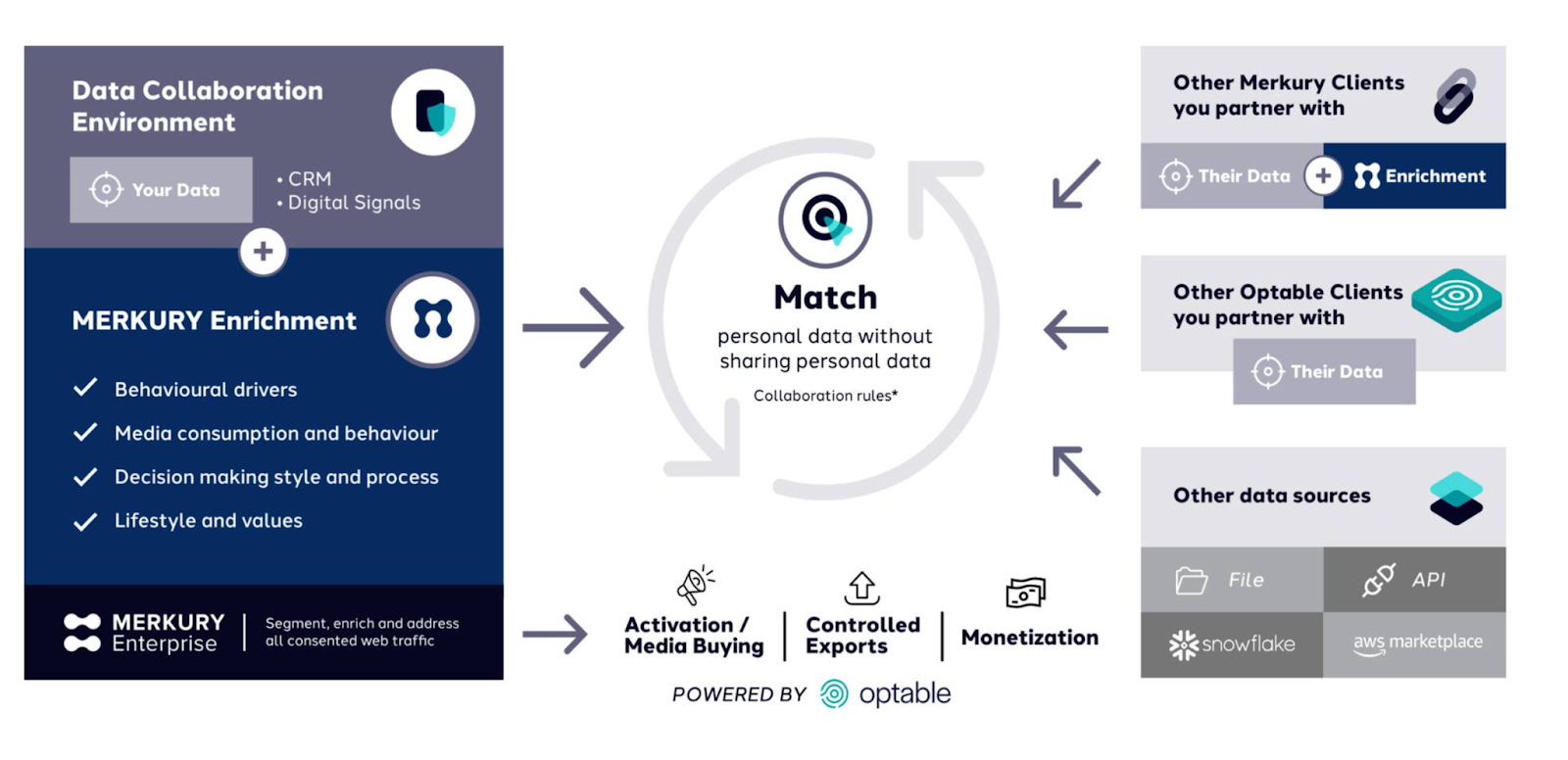

This is the gap Merkury fills.

Built by Dentsu Canada and powered by Optable’s clean-room technology, Merkury offers a scalable, privacy-compliant identity enrichment layer that plugs securely into a publisher’s existing ID graph. It is built on a market-specific identity dataset designed for the needs and nuances of Canadian audiences and is available in three tiers: a free “Flash” tier for exploration and overlap insights, a full-featured tier for enrichment and activation, and an enterprise tier for publishers who need continuous, high-volume identity resolution.

The Foundation: Understanding Canadians

Merkury’s dataset isn’t licensed, purchased, or inferred from a smattering of digital sources. It’s built on the largest agency-operated consumer benchmark available: Dentsu’s CCS. For over a decade, primary research on about 20,000 Canadians annually across more than 10,000 attributes has given insight into psychological drivers, decision-making patterns, media consumption, and lifestyle factors that basic signal or transactional data simply can’t capture. From this foundation, Merkury models identity enrichment across 600,000 unique postal codes, giving publishers granular audience understanding at scale.

How Merkury Works

1. Data Ingestion and Identity Resolution

Publishers typically start with fragmented identifiers—hashed emails, mobile IDs, login events, contextual signals, and first-party behavioral data. Merkury ingests these identifiers into Optable’s clean room, where they can be matched against Dentsu’s CCS-derived dataset to create a more complete, privacy-safe identity foundation.
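To make the ingestion step concrete, here is a minimal sketch of how an email address might be turned into a hashed identifier before it is loaded for matching. The source does not say which normalization or hash function Merkury or Optable expect; trimming, lowercasing, and SHA-256 are a common convention and are assumptions here, as are the function names.

```python
import hashlib


def normalize_email(raw: str) -> str:
    """Trim surrounding whitespace and lowercase the address (assumed convention)."""
    return raw.strip().lower()


def hash_email(raw: str) -> str:
    """Return the SHA-256 hex digest of the normalized address."""
    return hashlib.sha256(normalize_email(raw).encode("utf-8")).hexdigest()


# Two spellings of the same address resolve to the same hashed identifier.
first = hash_email("  Jane.Doe@Example.com ")
second = hash_email("jane.doe@example.com")
print(first == second)  # True
```

Normalizing before hashing matters because a hash hides small differences: without it, the same person can appear as two unrelated identifiers and lower the match rate.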

For publishers with large amounts of anonymous traffic, the enterprise “Merkury-as-a-source” tier provides additional value by helping resolve more of that unaddressed audience and incorporating those identities into their own graph for future activation and monetization.

2. Attribute Enrichment

Once identity resolution is established, Dentsu’s Merkury dataset provides access to high-quality behavioral attributes that deepen publishers’ understanding of their audiences. Teams can enrich their internal profiles with traits such as:

- Psychological factors and behavioural drivers

- Media consumption patterns and behaviour

- Decision-making styles and mindsets

- Personal passions, lifestyle, and values

This enrichment transforms partially known users into meaningful audience profiles ready for segmentation, personalization, and monetization.

3. Seamless Collaboration

Optable’s clean room serves as the backbone of the collaboration layer, allowing publishers to:

- Collaborate with partner advertisers or other Dentsu or Optable clients

- Support secure audience matching and overlap analysis

- Enhance audience profiles with advertiser-provided traits

- Enable lookalike modelling and reach extension

- Activate high-value, consented audiences

Merkury also benefits from Optable’s multi-partner management capabilities, making it easier to collaborate across many relationships within a single, streamlined environment.
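The overlap analysis mentioned above comes down to simple set arithmetic. The sketch below shows only that arithmetic on two small sets of already-hashed identifiers. It is not how a clean room is implemented: a real clean room computes these counts without either party seeing the other's list. The function name and report fields are illustrative assumptions.

```python
from typing import Dict, Set


def overlap_report(publisher_ids: Set[str], advertiser_ids: Set[str]) -> Dict[str, float]:
    """Count shared identifiers and express the overlap from each side's view."""
    matched = publisher_ids & advertiser_ids
    return {
        "matched": len(matched),
        # Share of the publisher's audience that the advertiser also knows.
        "publisher_match_rate": len(matched) / len(publisher_ids) if publisher_ids else 0.0,
        # Share of the advertiser's list that the publisher can reach.
        "advertiser_match_rate": len(matched) / len(advertiser_ids) if advertiser_ids else 0.0,
    }


report = overlap_report({"h1", "h2", "h3", "h4"}, {"h3", "h4", "h5"})
print(report["matched"])  # 2
```

Reporting the rate from both sides is useful because the same overlap can be a small slice of a large publisher audience and most of a small advertiser list.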

4. Activation Across Channels

Once enriched, audiences can be activated across a buyer’s preferred channels through Optable’s integrations or Dentsu’s media ecosystem. Key activation paths include:

- Real-time activation to DSPs such as The Trade Desk, Amazon DSP, and Google DV360

- Activation to social platforms including Meta, TikTok, and X

- Activation through publisher ad-serving infrastructure like Google Ad Manager and Prebid

Merkury Key Values for Publishers

- Operational Simplicity: Rather than building enrichment, privacy, and activation layers independently, publishers can rely on Optable’s standardized clean-room pipeline for a faster, more efficient path to scale.

- Future-Proofing Against Signal Loss:

  - A deterministic identity anchored in their own data

  - Enriched profiles that don’t rely on external cookies

  - Durable cross-channel addressability

  - Audience data grounded in primary Canadian research, not modelled proxies or imported datasets

- Improved Advertiser Alignment: Merkury provides a shared identity structure and a collaborative clean-room environment, making it easier to align with advertiser needs and accelerate campaign setup.

A Modern Identity Infrastructure for Growth

The Dentsu x Optable partnership helps publishers extend the value of the identity data they already own. By combining Dentsu’s decades of understanding Canadians with Optable’s privacy-first collaboration layer, publishers can broaden their audience understanding, strengthen advertiser relationships, and enhance the value of every impression they sell.

Across its three Canadian tiers (exploration, full activation, and enterprise identity resolution), Merkury gives publishers the flexibility to grow at the pace their strategy demands. In a market where every signal counts, Merkury provides a stronger, more complete identity framework to build on.

Contact us at sales@optable.co to learn more about how Merkury can upgrade your data and audience strategy.

This article mentions findings from the State of Audience Data Monetization 2025 report, in partnership with Digiday. To explore all the survey results and insights, download the full report here→

For years, the industry operated on a simple premise: great content brings audiences, and audiences bring ad dollars. That's still true, but it's no longer enough. Advertisers aren't just buying reach anymore. They're asking harder questions: Who exactly are we reaching? What can you prove about performance? How do we know this is working?

Publishers who can't answer those questions are losing ground. Publishers who can are building something more durable: direct relationships with advertisers, rooted in shared data and verified outcomes.

This is where data collaboration enters the picture—not as an innovation initiative, but as core infrastructure. Here's why it's becoming the foundation of audience monetization for leading publishers.

Advertisers Want Proof, Not Just Promises

Marketers today expect more than reach. They expect results.

Clean room–enabled data collaboration allows publishers to show exactly who they're reaching, how campaigns are performing, and what revenue outcomes they're driving. Instead of passively offering inventory, publishers who embrace collaboration can build custom audience segments with advertisers, activate campaigns using overlapped data, and measure performance together, securely and in real time.

This closed-loop model is already familiar to platforms like Meta and Google. Now it's within reach for publishers. And they're moving quickly: 64% of publishers say they are already collaborating with advertisers via clean rooms for campaign planning and measurement.

The use cases reflect a clear focus on accountability: publishers are prioritizing attribution and measurement, high-value audience creation, and privacy-safe data licensing as their primary collaboration goals.

Direct Sales Is Evolving—Not Disappearing

There's a misconception that programmatic has fully overtaken direct sales. In reality, direct-sold advertising remains essential, especially when enriched with collaboration.

In 2025, 81% of publishers expected programmatic revenue to grow, while 73% expected direct-sold revenue to hold steady. But the two don't have to compete. Data collaboration enhances both, and it's particularly powerful for direct sales, giving teams more valuable, data-rich packages to bring to market.

When publishers can match first-party data with advertiser inputs in clean rooms, they unlock premium inventory deals rooted in verified insights, tailored audience segments that command higher CPMs, and stronger buyer relationships built on transparency and shared performance metrics.

The publishers gaining ground are the ones going to key advertisers, building custom plans from shared audience data, and running campaigns with closed-loop measurement. It's the same playbook the major platforms use—now accessible to publishers willing to invest in the infrastructure.

The Infrastructure Is Finally Publisher-Ready

Legacy clean rooms were technical, isolated, slow, and built for platform-scale players. That's changed drastically.

Publishers are investing heavily in the foundational layer that makes collaboration possible. 78% are either actively using or building an identity graph, and nearly all agree that integrating with demand partners' alternative ID frameworks is essential to their monetization strategy.

Optable enables this shift with code-free setup and audience matching, secure policy-governed collaboration across partners, fast integration with DSPs, SSPs, and ad servers, and real-time activation across environments—from web to CTV.

This means even mid-sized publisher teams can operate with the precision and agility once reserved for walled gardens.

Data Collaboration Isn't Optional Anymore

82% of publishers now say data collaboration is important or mission-critical to their monetization strategy.

That's not a trend; it's a structural shift in how publisher revenue gets built.

The monetization strategies that will thrive in a cookieless, privacy-centric world are the ones built on secure collaboration, direct advertiser relationships, and future-proof infrastructure.

Optable gives publishers the tools to activate first-party data with control and compliance, unlock premium direct deals through shared insights, and scale partnerships with agent-to-agent automation.

Want to see how it could work for your team?

Reach out to our team to book a personalized walkthrough or see a demo of Optable in action. Contact our experts now or email sales@optable.co to start the conversation.

Revenue, Reach, Resilience: Identity Strategy is a Top Priority for Publishers

The digital advertising landscape is undergoing seismic change, driven by a profound shift toward a privacy-first internet. With the deprecation of third-party cookies, the decreasing utility of mobile advertising IDs, and new privacy features that can reduce the precision of a user's IP address, the traditional foundation of audience targeting is completely transforming.

In addition, traditional identifiers that once enabled seamless cross-channel audience targeting are diminishing. Global privacy regulations like GDPR and CCPA, new browser technologies that actively limit cross-site tracking, and the expansion of device use are leaving publishers with fragmented data and growing signal loss.

74% of publishers and media networks are still heavily reliant on third-party cookies and shared identifiers to deliver on their monetization strategies.

Optable | Digiday Report

To succeed in this new ecosystem, publishers must fundamentally redefine addressability, creating direct connections with audiences, emphasizing trust, and ensuring data security.

Publishers and media owners who build strong, future-proof identity strategies rooted in first-party data, consent-driven strategies, and technologies that scale with privacy regulations will emerge as leaders.

Key drivers for adopting a comprehensive identity strategy include:

- Crafting a robust first-party and authentication strategy

- Deploying an identity foundation that supports new value-generating opportunities

- Supporting multiple identity providers to ensure maximum coverage

- Adopting tools that offer flexible data controls and transparency to adhere to privacy rules and regulations

Identity management is a strategic imperative that plays a key role in determining a publisher's revenue, reach, and long-term competitiveness. Ensuring that their identity strategy is equally effective, efficient, and compliant is crucial for publishers to sustain audience monetization and advertiser trust.

Lay the Foundation for Identity Management Success

For publishers, a first-party identity graph is the central map for understanding their audience, tying together all the scattered information a user generates across the web. When someone visits a site on their phone, then their desktop, and later signs up for an email, they create multiple, isolated data points. The essential job of the graph is to perform identity resolution, a continuous process of matching and linking all those separate bits of data together. This creates a single, complete profile for that individual or household, giving the publisher a unified view of who the user is and what they do.
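As a rough illustration of identity resolution, the sketch below links identifiers that were observed together and then groups everything that is connected into one profile. It uses a union-find (disjoint-set) structure, which is one common way to do this and not necessarily how any particular identity graph product works. The class name, method names, and identifier formats are hypothetical.

```python
from collections import defaultdict


class IdentityGraph:
    """Toy identity graph: linked identifiers collapse into one profile."""

    def __init__(self):
        self._parent = {}

    def _find(self, node):
        # Register unseen identifiers as their own root.
        self._parent.setdefault(node, node)
        # Walk up to the root, shortening the path as we go.
        while self._parent[node] != node:
            self._parent[node] = self._parent[self._parent[node]]
            node = self._parent[node]
        return node

    def link(self, a, b):
        """Record that two identifiers were observed for the same user."""
        root_a, root_b = self._find(a), self._find(b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def profiles(self):
        """Return each group of connected identifiers as a set."""
        groups = defaultdict(set)
        for node in list(self._parent):
            groups[self._find(node)].add(node)
        return list(groups.values())


graph = IdentityGraph()
graph.link("cookie:abc", "email:h1")   # phone visit, later tied to a login
graph.link("device:xyz", "email:h1")   # desktop session with the same login
graph.link("cookie:zzz", "email:h2")   # a different user
print(len(graph.profiles()))  # 2
```

Because linking is incremental, new observations can be added as they arrive, which mirrors the "continuous process" described above.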

Building and owning this identity graph is crucial because it gives publishers full control over their audience data, reducing dependency on external signals. The identity graph becomes the central hub for managing and expanding first-party data, allowing publishers to strategically determine data sources, control privacy, and enrich the data with industry partners.

Crucially, leveraging an owned graph that’s flexible and extensible helps protect the business against programmatic changes. By resolving identities across all touchpoints, the graph maximizes addressable audiences and improves targeting accuracy, which ultimately strengthens the overall revenue strategy across both direct sales and programmatic channels.

To successfully implement a flexible identity foundation, publishers must start with strategic alignment across all teams and clear business goals. Successful organizations avoid siloing identity projects, recognizing they touch every corner of the business, from ad operations and data teams to legal stakeholders. This cross-functional approach ensures the identity graph is tied to measurable objectives and provides the strongest foundation for controlled addressability and revenue growth.

Key steps include:

- Forming a cross-functional workgroup to oversee requirements gathering, use case prioritization, and execution

- Developing well-defined, measurable KPIs (e.g., eCPM lift, match rate improvement, and time-to-integration of new sources) that are paired with clear tracking methodologies to ensure progress and accountability

- Aligning with privacy regulations like GDPR and CCPA to build user trust and ensure compliance

By treating identity as a company-wide initiative, publishers establish the operational foundation for scalable, compliant, and effective strategies.

Choose the Right Data and Technology Partners to Maximize Value

For publishers, the true power of an identity graph lies in the quality and depth of the data fueling it. To enhance audience insights, optimize monetization strategies, and deliver better advertising outcomes, choosing the right data and technology partners is critical, ensuring the resulting system is scalable and can help with compliance.

Different types of partners help publishers maximize audience value by:

- Extending the reach and accuracy of a publisher’s identity graph by linking additional identifiers, such as universal IDs, hashed emails, or deterministic and probabilistic matching solutions. Identity enrichment partners help bridge identity gaps across devices and platforms, ensuring seamless audience recognition in both authenticated and anonymous environments.

- Offering demographic, behavioral, and interest-based data to complement first-party signals. Audience attribute enrichment partners (third-party data providers) enable deeper audience segmentation, allowing publishers to create more relevant and engaging ad experiences to be sold directly to their advertising partners.

- Helping publishers to elevate the quality and relevance of their ad inventory through enhanced audience attributes and refined segmentation. Audience curation partners often utilize advanced algorithms and expert oversight to curate premium inventory for advertising.

- Providing essential insights that enable publishers to understand the effectiveness of their advertising strategies and share those results with advertising partners. Measurement partners offer unique data sets and analytics capabilities that can help publishers prove the ROI of their advertising campaigns.

Best Practice

When testing multiple identity enrichment partners, establish a well-structured control group to measure incremental value accurately. Test partners one at a time to isolate their impact, or if testing with overlap such as 95/5, ensure your control group excludes enriched data from all providers. This approach prevents data duplication and gives a clearer picture of each partner’s unique contribution to audience reach and performance.
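One way to set up such a test is sketched below. It assumes "95/5" means 95% of users are eligible for enrichment and 5% are held out as an unenriched control; that reading, the function names, and the example eCPM figures are all assumptions for illustration. Hashing the user ID keeps each user in the same group across sessions.

```python
import hashlib


def in_control(user_id: str, control_pct: int = 5) -> bool:
    """Deterministically place roughly control_pct% of users in the holdout."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < control_pct


def incremental_lift(treated_value: float, control_value: float) -> float:
    """Relative lift of the enriched group over the unenriched control."""
    if control_value == 0:
        raise ValueError("control value must be non-zero")
    return (treated_value - control_value) / control_value


# Hypothetical figures: eCPM of 2.30 with enrichment vs. 2.00 in the holdout.
print(round(incremental_lift(2.30, 2.00), 2))  # 0.15
```

Users for whom `in_control` returns `True` should receive no enriched data from any provider, so the comparison isolates what enrichment adds.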

Unlock New Revenue Opportunities with Identity Graphs

Once a publisher’s identity graph is in place, it becomes a strategic asset that unlocks new monetization opportunities, enhances ad targeting, and strengthens publisher-advertiser relationships to drive revenue. By identifying use cases that maximize return on investment, publishers can transform their identity foundation into a competitive advantage, increasing bid values, improving addressability, and unlocking new revenue streams.

Leading publishers define high-ROI use cases and build their identity strategy around them. By clearly defining ROI-focused use cases, publishers avoid spreading resources thin and maximize the business impact of identity initiatives. Use cases that can unleash effective data monetization strategies include programmatic monetization and direct ad sales.

Examples of programmatic monetization include:

- Signal enrichment: Publishers can grow programmatic by adding valuable signals such as contextual metadata, user behavior, first-party insights, and data provenance to bid requests. This enables advertisers to target trusted audiences more precisely, increasing bid values and competition for inventory.

- Audience curation: Publishers can collaborate with data providers, agencies, or supply-side platforms (SSPs) to create premium, high-value inventory segments across multiple publisher-operated domains. These curated deals offer advertisers exclusive, high-performing inventory with enhanced targeting and measurement capabilities.

- Data marketplace monetization: Publishers can generate additional revenue by packaging and selling anonymized audience segments or contextual data through data marketplaces. Advertisers use this high-quality, privacy-compliant data to enhance targeting and improve campaign performance.
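To picture the signal enrichment case from the list above, the sketch below adds identity and audience signals to a bid request shaped like OpenRTB 2.6, where `user.eids` carries extended identifiers and `user.data` carries audience segments. The helper function, the `id.example` and `publisher.example` sources, and the segment IDs are hypothetical; a production setup would normally rely on a header bidding wrapper or SSP integration rather than hand-built JSON.

```python
import copy


def enrich_bid_request(bid_request: dict, eids: list, segments: list) -> dict:
    """Return a copy of the bid request with identity and audience signals added."""
    enriched = copy.deepcopy(bid_request)  # leave the caller's request untouched
    user = enriched.setdefault("user", {})
    user.setdefault("eids", []).extend(eids)
    if segments:
        user.setdefault("data", []).append({
            "name": "publisher.example",  # hypothetical data provider name
            "segment": [{"id": segment_id} for segment_id in segments],
        })
    return enriched


base = {"id": "req-1", "imp": [{"id": "1"}]}
enriched = enrich_bid_request(
    base,
    eids=[{"source": "id.example", "uids": [{"id": "abc123", "atype": 3}]}],
    segments=["auto-intenders", "frequent-travellers"],
)
print(len(enriched["user"]["data"][0]["segment"]))  # 2
```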

Direct ad sales use cases include:

- Increased addressability: Publishers can meet advertisers’ demands by leveraging first-party data and contextual insights to create compelling packages based on content dynamics and audience behavior. Developing audiences with first-party data and a strong identity foundation helps maximize advertiser reach while meeting audience targeting requirements.

- Audience enrichment: Publishers can grow direct ad sales by using external data partnerships to build more valuable audience profiles. By providing advertisers with enriched audience targeting capabilities, publishers can justify premium pricing and improve campaign performance.

- Advertiser data collaboration: Publishers can work closely with brands and agencies to develop highly targeted campaigns, such as custom media plans, tailored audience segments, or unique measurement solutions. By aligning inventory and data with advertiser goals, publishers can offer differentiated, high-impact ad opportunities that drive better results.

Expand Beyond Programmatic Advertising to New Opportunities

Investing heavily in owned identity graphs and first-party data infrastructure will define the leaders in the next era of privacy-focused advertising. While maximizing programmatic and direct ad revenue is often the immediate driver, strong identity management offers much deeper organizational benefits. A composable and extensible identity graph not only unlocks short-term revenue gains but also creates long-term strategic advantages, leading to enhanced organizational efficiencies, better audience experiences, and the flexibility to explore valuable new opportunities with advertising partners.

Publishers are using their identity graphs to:

- Centralize advertising and marketing teams on common technology for optimal audience experiences: A strong identity infrastructure is key not only to advertising teams focused on monetization but also to marketing teams tasked with growing visits to, and engagement with, a publisher’s or media owner’s properties. To truly embrace content relevancy and personalization, both teams need information about their audiences.

- Break down data silos and create a deeper understanding of audience value: Identity graphs are a key input to business intelligence. The ability of an identity graph to tie audience behavior across various areas of a publisher’s network, as well as multiple sites, means it is crucial in their ability to understand audience lifetime value, acquisition costs, and other key metrics.

- Form partnerships for non-traditional methods of monetization: Many publishers and media owners possess incredibly unique insights about their audiences that can be valuable both to other publishers and to advertisers and even to adjacent businesses. Data clean room technologies can enable publishers to safely sync their data with other partners without sharing private information about their audiences. This puts publishers in a position to explore new forms of monetization, such as licensing agreements or data cooperatives, to establish new revenue streams.

- Power the next generation of agentic AI systems: By providing AI agents with a flexible, comprehensive identity foundation, publishers can facilitate the secure and efficient interaction of buy- and sell-side agents within data clean room environments.

Choose Optable for Identity Management

Optable is the identity management and data collaboration platform designed for the advertising ecosystem in the age of privacy. We empower media owners, publishers, and platforms to harness the power of their first-party data. Our platform makes it easy to assemble an identity graph across all sources, enrich it with leading partner data sets, and use the identity graph for activation in the channels that matter the most.

Ad sellers need a complete set of tools to support the many different ways that they monetize their audience data. For programmatic revenue, Optable makes it easy to plug into ID frameworks like UID 2.0, ID5, Yahoo ConnectID, and many others. Additionally, publishers with unique first-party audience data can port data into leading marketplaces like The Trade Desk and curation platforms such as PubMatic and Index Exchange. This allows publishers to explore different monetization strategies and meet buyers where they are looking to activate.

Data-driven sales teams win more share of ad spend. Optable makes it easy for media owners to collect, analyze, and activate audience data, no matter how complex their network or distribution is. Optable’s purpose-built tools for signal enrichment, audience building, attribute enrichment, clean room collaboration, and agentic audience planning mean that sales teams can respond faster to RFPs, deliver more relevant audiences, and offer deeper insights to their advertising partners.

“Optable’s identity solutions have transformed the way we manage data, offering unparalleled control and insights. We’ve seen significant improvements in yield optimization, making it an invaluable part of our programmatic strategy.” - Patrick McCann, Senior Vice President of Research, Raptive

Take control of your future in digital media with confidence. Contact Optable today to learn how our identity management solutions can help you!

This blog offers a brief overview of key findings from the State of Audience Data Monetization 2025 report, in partnership with Digiday. To explore all the survey results and insights, download the full report here→

As the digital advertising landscape faces its most profound transformation in over a decade, one fact has emerged for publishers: first-party data, identity, and partnerships are the new currencies of monetization.

The once-dominant, widely available third-party signals are fading, and the fragmented nature of audience signals has made addressability more complex than ever. In this shifting environment, publishers are being asked to do more than serve content; they must own, structure, and activate their audience data with precision and accountability.

Why Identity Is Now Central

Historically, publishers operated on a principle: “If we publish great content, people will come.” That still holds true. But as signal loss deepens and AI steals traffic, quality content alone isn't enough to drive revenue. Advertisers increasingly demand proof of audience value, and that starts with the ability to recognize, organize, and activate users at scale.

This is where identity graphs come in. Built on first-party signals like subscriptions, email logins, and on-site behaviors, identity graphs allow publishers to construct a unified, durable understanding of their audiences, without relying on cookies or opaque third-party data.

According to the 2025 Digiday x Optable survey, 78% of publishers are already using or building an identity graph, underscoring the urgent need to take control of audience recognition and monetization.

“By taking control of identity yourself — and not relying on browser-based cookies —publishers are better set up for the future. You own more of the way you connect with partners.”

— Paul Bannister, Chief Strategy Officer, Raptive

Building a Future-Proof Foundation

At Optable, we believe publishers shouldn’t have to choose between scale and privacy, speed and safety, or automation and control. Our platform was purpose-built for this moment, empowering publishers to:

- Build composable, interoperable identity graphs that bridge online and offline data.

- Resolve identities in real time across browsers, devices, and environments using trusted alternatives like UID 2.0 and Yahoo ConnectID.

- Activate audiences where it counts, including DSPs, ad servers, CTV, clean room collaborations, and more.

And we don’t stop at identity. With Optable’s Agentic Collaboration framework, autonomous AI agents help publishers go from audience planning to campaign activation in minutes (not weeks) while preserving privacy and governance every step of the way.

Why It Matters Now

As third-party identifiers disappear and AI reshapes how content is discovered and monetized, owning audience identity is how publishers stay relevant: it ensures addressability, powers AI-driven workflows, and maintains direct advertiser relationships.

In today’s market, identity is not just infrastructure. It’s a publisher's leverage. And in a world reshaped by AI and privacy, it’s the only sustainable currency publishers can truly own.


The advertising world has officially entered the agentic era — a shift where specialized AI agents assist humans in planning, negotiating, and optimizing media buys. Yet, for all the excitement about their potential, one challenge remains: these systems don’t yet speak the same language.

AdCP, MCP, and WTF

To solve this problem, a consortium of adtech and media companies has started work on the Ad Context Protocol (AdCP), an open standard that defines how AI systems communicate and collaborate across advertising platforms. AdCP gives agents the structure and context they need to work together accurately and efficiently. It is built on the Model Context Protocol (MCP), the underlying framework that allows AI models to connect to external systems securely.
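For readers who want to see what "speaking the same language" looks like on the wire, MCP messages are JSON-RPC 2.0, and an agent invokes a capability on a server with the `tools/call` method. The sketch below builds one such request. The tool name `get_products` and its `brief` argument are hypothetical placeholders for an advertising-specific tool; consult the AdCP documentation for the actual task names and schemas.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests carry a unique id per call


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical buyer-agent request built from a natural-language brief.
request = build_tool_call(
    "get_products",
    {"brief": "Reach outdoor enthusiasts in Canada during spring"},
)
print(json.loads(request)["method"])  # tools/call
```

A shared envelope like this is what lets a buy-side agent and a publisher's system exchange requests without a custom integration for every pairing.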

Optable recently sponsored Digiday’s explainer video, which unpacks MCP in detail. We recommend watching it to refresh your understanding of the protocol that serves as the foundation for AdCP. Read the full article on Digiday →

What Does AdCP Mean for Publishers?

For publishers, AdCP is a gateway to participating in the next generation of digital advertising, powered by intelligent automation, transparency, and interoperability.

Those who move first gain an advantage — not only in speed and efficiency, but in access to a fast-growing ecosystem of AI-enabled buyers. Getting AdCP-ready early helps ensure your inventory and audiences are discoverable by these buyers as adoption accelerates.

AdCP enables direct, intelligent communication between publisher systems and advertiser agents. These agents assist their human directors in negotiating, planning, and executing campaigns directly — with clear rules and full auditability.

In practical terms, that means publishers can make their inventory, audiences, and packaging options discoverable to AI-driven buyers, all while maintaining control of their data.

AdCP standardizes how AI agents collaborate, serving as the connect-the-dots layer. It enables intelligent agents on both sides of the marketplace to understand and transact with each other — transforming publisher data into a dynamic source of value.

Why Did Optable Join the Initiative?

Optable joined AdCP as a founding member because the future of AI in advertising must be open, collaborative, and grounded in trust.

Our mission has always been to make it easier for the advertising ecosystem to analyze, connect, and activate its data. Through our Agentic Collaboration framework, Optable has already implemented AdCP-compatible workflows with pilot publishers.

Enroll in our beta program to implement the Optable Planner Agent, the AI-powered sales assistant that automates ad-planning workflows from RFP to activation, built on the same AdCP principles shaping the future of advertising.

We believe the advertising ecosystem only thrives when publishers retain ownership of their data and when innovation happens in the open. AdCP provides the structure to ensure a future where AI accelerates opportunity rather than creating new silos.

Why Publishers Should Get Involved

AI-driven buying is no longer theoretical; many leading companies are already experimenting with automated campaign planning and media activation. To stay visible to these new forms of demand, publishers need to ensure their inventory is accessible through AdCP.

Joining early doesn’t just provide access — it offers influence. Publishers that embrace agentic systems like Optable Planner today will be ready to support AdCP workflows as they are rolled out in the industry, enabling interoperability with buy-side agents. The benefits are tangible: improved operational efficiency, greater transparency in demand, and inclusion in the growing ecosystem of AI-native media buying.

Learn more about the initiative at: https://adcontextprotocol.org/

Becoming AI-Ready: Building on Strong Identity Foundations

AI readiness starts with data readiness. For agents to operate effectively, publishers need the right audience and identity infrastructure, along with control and compliance safeguards that keep data within trusted boundaries. Agents can’t (quite) work miracles, but they can help teams automate and streamline existing workflows and break down silos. To do so, they need reliable data to transact on. A strong identity and audience foundation is essential for benefiting from agentic capabilities.

That’s where Optable comes in. Our platform provides the identity solutions and safe environment publishers need to participate confidently in the agentic ecosystem.

Learn how to build an identity spine with Optable →

Key Considerations for Publishers Participating in the AdCP Initiative

Publishers using agents on the Optable platform can automate workflows while maintaining complete control over their data and decision-making.

Here’s what that looks like in practice:

- Data stays in your ownership: It never leaves your Optable tenant.

- No pooling: Your data isn’t mixed with anyone else’s.

- Secure access only: Optable Planner Agent users don't need to see or access user data directly; the agent builds relevant signals from marketing briefs written in natural language.

- Low sales overhead: Agentic workflows reduce manual sales effort to near zero.

- Full transparency: You can see which buyers are activating audiences and view their prompts to understand campaign intent.

- Data separation maintained: Each publisher’s data remains in its own deal; buyers receive a list of relevant opportunities to target from their DSP — never a blended dataset.

Building the Future of Agentic Advertising

Publishers are entering a new phase — one where AI agents can strengthen data value and elevate relationships with partners. Experimentation and adoption are already underway, and Optable is helping shape this transformation in the best interests of publishers.

By embedding the Ad Context Protocol within our Agentic Collaboration framework, Optable provides a secure, interoperable, and controllable environment where publisher and buyer agents can collaborate seamlessly.

In this evolving landscape, Optable serves as the identity and collaboration layer of agentic advertising, empowering publishers to turn intelligence into long-term advantage.

Reach out to our experts at sales@optable.co to learn more about agentic advertising.

Thank you to everyone who joined us for Optable Connect 2025. This year’s theme was clear: AI is moving from buzzword to backbone. Across keynotes, live demos, and candid panel debates, we saw how the industry is stitching together data, automation, and standards to create real business value for publishers, marketers, and technology partners.

We’re sharing the highlights, the big ideas, and practical takeaways you can bring back to your teams.

The Big Idea: Agentic Collaboration + Open Standards

In the opening, Optable's CEO, Vlad Stesin, framed an industry in transition: more first‑party data, more privacy pressure, and faster AI cycles, with power steadily shifting toward publishers. The question is how to turn that shift into durable value. Our answer: Agentic Collaboration (human‑in‑the‑loop AI agents working across adtech workflows) and AdCP, the Ad Context Protocol, a standard that lets those agents and platforms talk to each other reliably. Learn more about AdCP.

Vlad Stesin laid out definitions and the vision of agent‑driven planning, activation, measurement, and optimization with people in control of the loop. Most importantly, he issued a strong call to action to the audience: let's build this ecosystem together.

AI in Action: From Brief to Audience (and Back Again)

In the live product session, Optable's CPO, Bosko Milekic, and CTO, Jeremie Lasalle Ratelle, showed Planner Agent working the way teams work: read a brief, propose audiences, find pre‑built segments, activate, and share relevant insights. Importantly, it also says “I don’t know” when it should.

We also previewed how AdCP underpins agent‑to‑agent and agent‑to‑platform communication, connecting buyer and seller agents through shared standards.

Takeaway: Bringing prompts to data — with standards for how agents exchange context and results — compresses planning cycles and keeps humans firmly in charge of strategy.

Session Highlights

1. AI in Media Buying: What’s real, what’s not, and where we’re going

Moderator: Vlad Stesin (Optable)

Speakers: Brian O’Kelley (Scope3), John Hoctor (Newton Research), Michael Driscoll (Rill Data)

This conversation separated real progress from hype. Themes included:

- Control vs. automation: Agent frameworks are useful only if they’re transparent and auditable; the buyer stays the editor‑in‑chief.

- Signals that matter: As identifiers fade, resilient signals (quality context, consented first‑party data, robust outcomes feedback) become the bedrock.

- Standards momentum: Agents need a common language; AdCP emerged as that connective tissue for planning and buying.

2. From Signal Loss to Signal Intelligence: Driving Performance with AI & First-Party Data

Moderator: Patrick Viau (Optable)

Speakers: Adam Heimlich (Chalice AI), Lindsay Van Kirk (People Inc.), Chris Feo (Unity)

The group showed how to turn sparse, privacy‑safe inputs into actionable “signal intelligence.”

- AI fills the gaps by learning from patterns across contextual, behavioral, and first‑party signals — not by recreating invasive identifiers.

- Clean collaboration environments and data interoperability are now table stakes for responsible, high‑performance activation.

- Practical step: start by improving first‑party data quality (schema, freshness, event depth) before layering AI models.

3. How Content Owners Can Navigate Their Relationship With AI Giants

Presenter: Anthony Katsur (IAB Tech Lab) joined by Jonathan Roberts (People Inc.)

Anthony Katsur delivered a wake-up call for publishers facing signal and traffic loss in the age of AI. He explained how LLMs freely crawl and summarize the open web, disrupting the traditional value exchange that once drove site visits and revenue.

To address this, he introduced the Content Monetization Protocol (CoMP) — a new technical framework that enables publishers to set access terms, authenticate usage, and monetize AI queries. The initiative includes secure API-based communication, tokenized content tracking, and a phased rollout:

- Phase 1: Establish API controls and crawler access and discovery standards

- Phase 2: Build monetization models like cost-per-query

His main message: It’s time for publishers to get paid for the value their content brings to AI models.

4. Creating a Buyer & Seller Win‑Win With Curation

Moderator: Rob Beeler (Beeler.tech)

Speakers: Paul Bannister (Raptive), Lori Goode (Index Exchange), Kyle Vidasolo (Elcano)

Curation is evolving from “nice to have” to efficiency engine:

- Publishers gain yield, control, and transparency.

- Buyers get cleaner supply paths, clearer audience definition, and measurable outcomes.

- The most compelling examples came from publisher‑SSP‑curation partnerships, showing how audience + inventory packaging can outperform the open marketplace when executed with shared incentives.

5. Fueling Smarter Media: Data & AI with Hearst and The Weather Company

Moderator: Kristy Schafer (Optable)

Speakers: Jessica Hogue (Hearst), Felix Zeng (The Weather Company)

Two leaders shared what it looks like to be AI‑ready end‑to‑end:

- Data architecture first: Organize front‑end use cases (personalization and advertising) atop back‑end structures that make data ML‑ready.

- Context is a superpower: Weather‑driven and behavioral signals unlock relevant experiences and new monetization paths — from syndicating data to vertical partners to novel ad formats.

- Cross‑device and journey insights: Several examples showed how consolidating consented signals informs both UX and yield.

6. How the Data Landscape Is Helping Power the Next Era of Addressability

Moderator: Andrew Dumas (Optable)

Speakers: Jonathan Durkee (True Data), Mathieu Roche (ID5), Ryan Teshima-McCormick (MediaWallah)

The final panel discussed how the industry is adapting to a rapidly changing identity landscape, where cookies and device IDs are disappearing, and regulation is reshaping how data is used:

- The panel examined how adaptive, cross-channel identity frameworks are redefining addressability amid disappearing cookies and tighter regulations.

- Speakers agreed that fragmentation, not signal loss, is the core challenge, highlighting the need for standardized, interoperable approaches across CTV, mobile, and audio.

- First-party data and AI-driven probabilistic identity were cited as key enablers of more accurate, privacy-conscious targeting and measurement.

- The session concluded with a call for open, collaborative identity frameworks to create durable, future-ready addressability across the ecosystem.

Six Takeaways

- Make your data AI‑ready. Tighten schemas, enrich event depth, and ensure governance so agents can act confidently on your data.

- Adopt agentic workflows where they help most. Start with planning and audience construction; keep humans in the loop to set strategy and guardrails.

- Lean into curation. Package high‑quality audiences with transparent supply paths; align incentives so “win‑win” isn’t just a slogan.

- Build on open standards. Agents need a shared language — AdCP for collaboration across planning, activation, and optimization.

- Measure what matters. As signal fragmentation grows, optimize to outcomes and value, not vanity metrics. Our own results show that improved addressability can correlate with 35%+ CPM uplift when audiences get demonstrably more valuable.

- Prepare for the next era of addressability. Focus on interoperable identity frameworks, first-party data, and AI-driven probabilistic models to create durable, cross-channel addressability grounded in trust and transparency.

What’s Next

Our focus remains the same: help publishers and their partners monetize more effectively by connecting data, AI, and standards into a working system.

- We’ll continue to invest in enterprise‑scale identity and signal activation — the groundwork for intelligent workflows.

- We’ll deepen Agentic Collaboration — practical agents, transparent controls, and interoperability via AdCP.

If you joined us, thank you for the energy and open conversation. If you couldn’t make it, we hope this recap helps you catch up — and we’d love to keep the discussion going.

Let’s build the connective tissue of this new ecosystem — together.

Programmatic advertising, which automates the buying and selling of digital ads, has long relied on third-party cookies. These cookies have enabled publishers to deliver personalized content to individuals based on their behavior across websites.

However, with the combination of increasing global privacy regulations and major web browsers phasing out third-party cookies, the landscape of digital advertising is rapidly evolving. Understanding these shifts is essential for publishers and advertisers striving to balance privacy with effective ad targeting and campaign performance.

The decline of numerous signals including third-party cookies has left a significant gap in the digital advertising world, but Universal IDs are emerging as a powerful solution. Instead of relying on unreliable data like cookies, these new identifiers use trusted first-party information to recognize individuals across digital platforms.

This unified approach provides companies with more accurate identity resolution and better targeting capabilities. It also gives publishers a deeper understanding of their audience, which helps them enhance their advertising efforts. Ultimately, Universal IDs are a standardized, privacy-friendly solution that allows the entire ad tech ecosystem to navigate a modern, privacy-conscious landscape.

"Universal IDs are a valuable asset to consider. These solutions can unlock the ability to increase ROI, reach more users, and deliver campaign objectives across both cookie-based and cookieless environments. Ultimately, they replace the capabilities that cookies have been used for in a privacy-compliant way." – ID5

Types of Universal IDs: Understanding Your Audience to Enhance Targeting

Universal IDs may be built on various technologies and methodologies to enable consistent visitor identification across platforms, with each type offering a specific approach to recognition and privacy compliance. Some solutions also focus on interoperability, allowing IDs to work across different systems and environments.

Deterministic Universal IDs

Deterministic Universal IDs are generated from directly identifiable and verified data, such as email addresses, phone numbers, or login credentials – typically first-party data collected via logins or subscriptions. These identifiers are secured using cryptographic hashing or encryption, transforming the original data into anonymized, fixed-length strings to protect privacy. Because they rely on authenticated data, deterministic Universal IDs deliver high accuracy with consistent recognition of individuals across devices and platforms, provided proper consent is obtained. This approach provides a way for advertisers to target individuals across platforms with high precision while respecting privacy choices.
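To make the hashing step concrete, here is a minimal sketch of how an authenticated email address could be turned into a fixed-length identifier. The normalization rules (trim and lowercase) and the choice of SHA-256 are assumptions for illustration; each Universal ID provider defines its own requirements, and the function names are hypothetical.

```python
import hashlib


def normalize_email(raw: str) -> str:
    """Trim whitespace and lowercase so equivalent addresses hash identically.

    Assumed normalization; real ID providers may specify different rules.
    """
    return raw.strip().lower()


def hashed_email_id(raw: str) -> str:
    """Return the SHA-256 hex digest of the normalized email (64 hex characters)."""
    return hashlib.sha256(normalize_email(raw).encode("utf-8")).hexdigest()


# The same address entered on two devices yields the same identifier.
id_from_phone = hashed_email_id("  Jane.Doe@Example.com ")
id_from_laptop = hashed_email_id("jane.doe@example.com")
print(id_from_phone == id_from_laptop)
```

Because the output depends only on the normalized input, two systems that apply the same rules can match records without exchanging the raw email address.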

Probabilistic Universal IDs

Probabilistic IDs use statistical models and machine learning to infer identity based on patterns in behavior, device attributes, IP addresses, and other signals. Typically used to complement deterministic identifiers in hybrid approaches, probabilistic IDs are not tied to a single, verified identity – but are built on the likelihood that different signals belong to the same person. This approach is also useful when deterministic data is unavailable, such as environments where consented, first-party data is sparse. Publishers that don't have access to login data may rely on probabilistic IDs to improve targeting and attribution.
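As a rough illustration of the idea, the sketch below scores how likely two observations are to belong to the same person by adding up weights for the signals they share. The signal names, weights, and threshold are all hypothetical; production systems typically rely on trained statistical or machine learning models and not on hand-set weights like these.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    ip_prefix: str    # e.g. the first three octets of an IP address
    user_agent: str
    timezone: str
    language: str


# Hypothetical weights (in points out of 100) for agreement on each signal.
WEIGHTS = {"ip_prefix": 40, "user_agent": 30, "timezone": 20, "language": 10}


def match_score(a: Observation, b: Observation) -> int:
    """Sum the weights of the signals on which the two observations agree."""
    return sum(
        weight
        for signal, weight in WEIGHTS.items()
        if getattr(a, signal) == getattr(b, signal)
    )


def likely_same_person(a: Observation, b: Observation, threshold: int = 70) -> bool:
    """Treat the pair as a match when the score reaches the (assumed) threshold."""
    return match_score(a, b) >= threshold


visit_1 = Observation("203.0.113", "Browser/1.0", "America/Toronto", "en-CA")
visit_2 = Observation("203.0.113", "Browser/1.0", "America/Toronto", "fr-CA")
print(match_score(visit_1, visit_2), likely_same_person(visit_1, visit_2))
```

The result is a likelihood-style judgment, not a verified link, which is why these identifiers usually complement deterministic ones.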

Publisher-Generated First-Party IDs

Publisher-generated first-party IDs are generated by publishers based on their first-party data. Often collected via logins, subscriptions, or on-site behavior, this data is then used to build audience profiles, setting the foundation for publishers to onboard their first-party data and connect it with advertisers' audiences. This approach maintains identities within a publisher's network, offers secure, anonymized data that can be shared with approved partners, and can be mapped to other Universal ID systems via identity resolution.

Universal IDs vs. First-Party Data

While closely related, Universal IDs and first-party data are not the same thing, but they do work together in the evolving digital advertising ecosystem. First-party data refers to the information a company collects directly from its visitors through interactions such as subscriptions, purchases, surveys, feedback forms, and account registrations. This data is considered highly valuable because it's accurate, consent-based, and directly tied to real individual behavior and preferences.

First-party and offline data are often the foundational sources used to fully leverage Universal IDs. When a publisher collects an email address or login ID from an individual, this information can be hashed and used as the basis for matching with a Universal ID that enables consistent subscriber recognition.

A Universal ID is a unique, persistent identifier assigned to an individual, enabling recognition across various platforms, devices, and ad tech systems, without relying on third-party cookie syncing. These IDs are usually created by ad tech vendors, identity solution providers, or industry coalitions.

What sets Universal IDs apart is their interoperability, allowing various participants in the advertising ecosystem – publishers, supply-side platforms (SSPs), demand-side platforms (DSPs), and advertisers – to recognize and target the same individual across channels with consistency and precision.

The Importance of Universal IDs: Navigating The Decline of Cookie Advertising

Driven by the industry-wide shift away from third-party cookies, Universal ID solutions have emerged as a sustainable and privacy-forward solution for identity resolution. The ecosystem, enabled by Universal IDs, empowers both publishers and advertisers to deliver secure experiences across the digital advertising supply chain. By leveraging first-party data collected directly from subscribers, Universal IDs create a consistent, shared identity, enabling effective targeting, improving user experience, and allowing advertisers to serve relevant ads across platforms.

Traditionally, disparate IDs had to be synchronized to recognize a person across platforms. Because the traditional cookie-based ecosystem has no single standardized identifier shared across platforms, cookie syncing creates latency and diminishes performance. This complicated syncing process not only increases the risk of data loss but also slows page load times and reduces match rates due to fragmentation and a lack of standardization.

By combining anonymized data with cross-domain tracking capabilities, Universal IDs eliminate the need for complex and inefficient cookie-syncing processes, acting as a unified identifier that all approved parties can use. This reduces development overhead and improves efficiency in data matching, ad delivery, and performance measurement.

Benefits of Universal IDs

- Eliminate reliance on third-party cookies and cumbersome data-syncing services

- Enhance data accuracy and identity resolution, delivering better match rates

- Reduce duplication and enable precise sample sizes for ad targeting

- Securely store authenticated first-party data on dedicated platforms

- Align ad targeting more closely with individual interests for improved campaign performance and more relevant ad delivery

- Increase revenue opportunities through higher-value impressions

- Ensure accurate cross-platform recognition without relying on device-specific cookies

- Empower marketers to offer visitors meaningful value (loyalty programs, personalized experiences, gated content, etc.) in exchange for their information

The Future of Universal IDs: Navigating the Cookie-Less Future

Major browsers are already blocking third-party cookies and restricting access to IP addresses, and publishers and advertisers are under increasing pressure to find sustainable alternatives for identity resolution. With the void left by third-party cookies, Universal IDs offer a more robust, future-proof, and standardized approach to identity resolution. They have been steadily gaining traction and emerging as one of the most promising tools in this evolving landscape.

While the groundwork for Universal ID adoption is well underway, the ecosystem is still maturing. Key challenges remain around interoperability, industry-wide standardization, and ensuring compliance and transparency.

Universal IDs represent a strong foundation for the future of personalized advertising in a privacy-first world. As the complete phaseout of third-party cookies approaches, the effectiveness and adoption rate of Universal IDs will become even clearer. However, the true impact will only be known once cookies are fully deprecated across all major platforms.

Enhancing Addressability with Optable

With a unified, privacy-compliant framework that can work across the open web and programmatic ad channels, Universal IDs enable precision targeting, consistent user experiences, and higher match rates for advertisers.

With cookies on their way out, Optable enables media owners to create premium, privacy-safe media activations. Get in touch with us to learn how we power seamless compatibility with the advertising ID ecosystem.

Read more about how Optable turns data into opportunity with Universal ID solutions.

When publishers think about their identity spine, the focus is often on coverage, accuracy, and match rates. But there is another dimension that’s just as critical, and often overlooked: flexibility.

Your audience data, your AdTech stack, and your business goals are always evolving. What you decide today as your “primary” identifiers might not be the right choice six months from now. The question is, when those needs change, do you have the freedom to adapt on your terms, or are you locked into a costly, time-consuming rebuild?

The problem with rigid graphs

In most identity graph implementations, the structure is fixed the moment data is ingested. Your linkage rules, such as which IDs are considered primary and which are fallback, are baked into the schema. If you want to make a change, you often need to:

- Re-ingest and re-process your entire visitor dataset

- Rebuild linkages from scratch

- Pause other projects while the engineering team manages the migration

For publishers managing large volumes of historical and real-time first-party data, this rigidity creates operational drag. Every re-ingest is costly in terms of infrastructure resources, internal effort, and time to market.

Flexibility by design with Optable

With Optable, flexibility is built into the core of how identity resolution works. You can change primary identifiers at any time, adjust linkage rules to accommodate new data sources or advertising use cases, and apply different scopes of resolution. Scopes give you the freedom to tailor how identity is managed for different objectives, ensuring that activation and enrichment each work the way you need, without reloading or migrating data.

The Optable platform is built around schema on read. Instead of locking your data into a rigid structure when it is ingested, the platform continuously evaluates and resolves your ID graph data into a normalized list of visitor records based on your latest configuration every time it is accessed.

Why schema on read matters

For the non-technical stakeholders among us, schema on read means the platform interprets the data’s structure at query time rather than load time. This is especially advantageous in a fragmented publisher environment because it:

- Works with mixed data sources, including batch, real-time, and warehoused

- Adapts instantly when new identifiers or linkages are introduced

- Avoids downtime and heavy ETL cycles when changes are needed

For programmatic and yield leaders, this translates into agility. You can test new audience strategies, onboard different partners, or pivot targeting rules without waiting on long engineering sprints.
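For readers who prefer to see the idea in code, here is a minimal sketch of schema on read applied to identity data. Raw identifier clusters are stored as ingested, and the choice of primary identifier is applied only when the data is read. The record layout, the `ResolutionConfig` class, and the `resolve` function are hypothetical and do not represent Optable's actual API or implementation.

```python
from dataclasses import dataclass

# Raw records stay as ingested: each is a cluster of identifiers seen together.
RAW_CLUSTERS = [
    {"email_sha256": "e1", "site_id": "s1", "mobile_id": "m1"},
    {"site_id": "s2", "mobile_id": "m2"},
    {"mobile_id": "m3"},
]


@dataclass(frozen=True)
class ResolutionConfig:
    # Identifier types in priority order; the first one present becomes primary.
    priority: tuple


def resolve(clusters, config):
    """Build normalized visitor records at read time from the current config."""
    visitors = []
    for cluster in clusters:
        primary_type = next((t for t in config.priority if t in cluster), None)
        if primary_type is None:
            continue  # none of the configured identifier types is present
        visitors.append({
            "primary_type": primary_type,
            "primary_id": cluster[primary_type],
            "linked": {k: v for k, v in cluster.items() if k != primary_type},
        })
    return visitors


email_first = ResolutionConfig(priority=("email_sha256", "site_id", "mobile_id"))
site_first = ResolutionConfig(priority=("site_id", "email_sha256", "mobile_id"))

# Same stored data, two different views, with no re-ingestion in between.
print([v["primary_id"] for v in resolve(RAW_CLUSTERS, email_first)])
print([v["primary_id"] for v in resolve(RAW_CLUSTERS, site_first)])
```

Switching the primary identifier here is a one-line configuration change; the stored clusters are never rewritten, which is the property that avoids a re-ingest.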

Adapting as you learn

Your identity strategy will evolve as you learn. You might start with hashed emails as your primary key, then later shift to a deterministic on-site identifier, or you might add probabilistic linkages for specific activation use cases.

With a flexible identity graph hosted by Optable, those changes are configuration updates, not re-architecture projects. Scopes make this adaptability even more powerful: you can fine-tune identity resolution for one use case without disrupting another. That means you can test, learn, and optimize with confidence, improving performance while keeping operations streamlined.

The takeaway

A flexible identity graph is not just a technical convenience; it’s a business advantage. It allows you to:

- Respond faster to changes in data availability and privacy regulations

- Unlock new monetization and activation use cases faster

- Align identity resolution with your business goals using configurable scopes

- Reduce the operational overhead of managing your audience data

If you are building or rethinking your identity solution, ask yourself: will this graph adapt with me, or will it hold me back? With Optable, you can ensure it is built to adapt.

For many publishers, there are really two stories of AI.

The first is the dark one: the story of web publishers whose content is scraped, remixed, and redistributed without compensation. It threatens the very foundation of the open and free web. A web that, in large part, is funded by advertising.

The second story is brighter, and it’s the one I want to focus on here. It’s the story of publishers and advertisers leveraging modern AI: more specifically, large language models (LLMs), autonomous agents, and expert assistants that can help better align marketing goals with audiences.

Both stories matter. But let’s stay with the second one for a change.

Why AI?

When we first started experimenting with LLMs, my mental model was simple: imagine platform workflows augmented by a digital advertising version of Clippy. Smart assistants that could translate natural language into SQL queries, run an audience model, or generate a campaign report. Some of these assistants would be useful, others less so, especially since, as I’ve learned, humans will do almost anything to avoid having to talk to a bot. But the picture was still one of “tools that help humans get stuff done.”

Now that we’ve been at this for some time, the more exciting question has emerged: what happens when these assistants aren’t just helpers, but collaborators?

Allow me a quick digression. Having been around digital advertising for a while (and perhaps this is the old man in me talking), I remember when advertisers and publishers actually talked about ad campaigns. Communication often happened over email, sometimes on the phone. Video calls weren’t the norm yet. The cast of characters included publisher sales reps and media agency buyers, with ad ops, analysts, and data teams on both sides.

Sounds inefficient, right? And it was. But there was a benefit to that complexity: when decent people talk directly, trust and accountability come built in. You tend to share more about your actual goals. You try harder to make things work. Reputation and repeat business were always on the line.

Fast forward to today: real-time auctions have brought incredible efficiencies, but they’ve also eroded some of that organic incentive alignment. The frictionless protocol stripped away some of the humanity and creativity that is core to advertising.

So here’s the emerging question: putting aside cost of sale and scale challenges for a moment, what if advertisers and agencies could once again design and run campaigns by interacting more directly, not just with humans but with agents acting on behalf of humans, using the same kind of natural language to exchange thoughts and ideas and help align goals and objectives? Could this better align advertiser goals with audiences? Could it make advertising feel better, behave better, work better?

That’s worth getting excited about.

What We’ve Learned So Far

Working with LLMs has taught us something both obvious and profound: they are dazzling at simulation, but shallow at self-awareness. They can produce fluent, convincing responses and even mimic reasoning, but they have no reflexive capacity to recognize when they’ve gone astray. The outputs can be brilliant in one moment and dangerously wrong in the next, with equal confidence.

This duality means that while LLMs can simulate many human workflows, from drafting creative copy to parsing data schemas, they must be deployed carefully. They thrive when given the right scaffolding: a contained problem space, clear context, and a well-bounded objective. Left unconstrained, they risk drifting, fabricating, or amplifying error.

That’s why the path forward hasn’t been about building one all-knowing digital assistant, but rather assembling constellations of specialized agents. Each is trained or prompted for a narrow domain of competence: a media planning agent, a data transformation agent, an audience segmentation agent. These agents are better behaved precisely because their world is smaller and the teams building them can impart context and create clear guardrails based on deep domain knowledge. Instead of asking them to “understand everything,” we’re asking them to execute well-defined tasks within a shared environment.

Of course, even narrow specialists are only as useful as their ability to work together. And this leads to the bigger frontier.

Agentic Collaboration in Digital Advertising

If specialized agents are the components, collaboration is the system. Real work rarely lives inside the walls of a single agent. Planning a campaign, for instance, may begin with a creative brief that must be interpreted by one agent, handed to another to model against audience data, and then routed to yet another to generate media plans and validate them against inventory. Without coordination, this quickly becomes chaos.

This is why the idea of Agentic Collaboration is so compelling. It is not enough to build competent agents; we need ways for them to communicate, to delegate, to negotiate, and to reconcile. Inside an organization, this means establishing frameworks where multiple agents can operate on the same context, share state, and pass tasks fluidly without losing fidelity. Across organizations, the challenge becomes even more interesting: what happens when an advertiser’s agents need to converse directly with a publisher’s agents, or when third-party specialist agents need to be introduced into the workflow?
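A toy sketch can make the coordination problem more tangible. Below, three stand-in "agents" are plain Python functions that read from and write to a shared context, and a small coordinator stops the hand-off when an upstream agent has produced nothing to work with. Everything here is hypothetical: the agent names, the keyword matching, and the pipeline structure are illustrative assumptions, with no LLM calls and no relation to any specific protocol or product.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Shared state handed from one agent to the next."""
    brief: str
    data: dict = field(default_factory=dict)
    log: list = field(default_factory=list)


def brief_agent(ctx: Context) -> None:
    # Stand-in for interpreting a brief: pick out a few known topics.
    known_topics = ("sports", "travel", "finance")
    ctx.data["topics"] = [t for t in known_topics if t in ctx.brief.lower()]


def audience_agent(ctx: Context) -> None:
    # Stand-in for audience modelling: one segment name per topic.
    ctx.data["segments"] = [f"{t}_enthusiasts" for t in ctx.data["topics"]]


def planning_agent(ctx: Context) -> None:
    # Stand-in for media planning: propose one line item per segment.
    ctx.data["plan"] = [{"segment": s, "status": "proposed"} for s in ctx.data["segments"]]


# Each step: (name, agent, keys it needs from the shared context).
PIPELINE = [
    ("brief", brief_agent, []),
    ("audience", audience_agent, ["topics"]),
    ("planning", planning_agent, ["segments"]),
]


def run(ctx: Context) -> Context:
    """Run agents in order, stopping with a logged reason if inputs are missing."""
    for name, agent, requires in PIPELINE:
        missing = [key for key in requires if not ctx.data.get(key)]
        if missing:
            ctx.log.append(f"{name}: stopped, missing {missing}")
            return ctx
        agent(ctx)
        ctx.log.append(f"{name}: done")
    return ctx


result = run(Context(brief="Reach travel and sports fans this summer"))
print(result.data["plan"])
print(result.log)
```

The design point is the explicit contract between steps: each agent declares what it needs, and the coordinator records why a hand-off stopped, so a missing input is surfaced before anything downstream guesses at it.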

At that point, protocols matter. Just as real-time bidding was only possible once the industry coalesced around shared protocols and standards for describing inventory, price, and demand, agentic collaboration will require structures for intent, context, and trust. Compelling new protocols such as MCP and A2A have emerged to support a new infrastructure across our industry. If agents are to transact meaningfully on behalf of their human principals, we will need conventions for verifying what they can and cannot do, and for ensuring that their exchanges reflect not just efficiency, but accountability.

The product implication is enormous. Platforms like Optable’s are no longer just facilitating data exchange or workflow automation; they are becoming the medium in which agents collaborate. That means exposing enough functionality and data to make collaboration useful, while constraining enough to keep it safe and aligned. It means thinking carefully about how agents identify themselves, how they signal authority, and how they fail gracefully when they don’t know the answer.

If this sounds familiar, it should. In some sense, we are circling back to the kind of direct communication that once characterized the industry. Only now, the conversations are mediated by software agents that can operate at machine scale and speed. Done well, this can fundamentally upgrade the methods to establish trust, alignment, and shared understanding in digital advertising.

What We’re Up To

At Optable, our starting point was building a platform where publishers and advertisers could collaborate transparently on data, without intermediaries diluting the signal or hoarding the value. That same conviction now extends naturally into the world of AI.

Agentic collaboration, to us, is not about replacing humans. It’s about restoring what was lost when programmatic scaled: the trust and alignment that come from two parties working directly toward a shared objective. The difference is that now, agents can help scale those conversations across thousands of campaigns, billions of impressions, and an ecosystem that demands both speed and precision.

Our role is to provide the medium where this can happen safely, particularly from the perspective of publishers, the creators that make the free internet possible, and the custodians of user and audience data. That means building the infrastructure where specialized agents, some ours, some yours, some built by our partners, can meet, exchange, and work together under clear rules. It means designing protocols that enforce accountability and safeguard data, while allowing creativity and experimentation to flourish. And it means ensuring that when agents collaborate across organizational boundaries, they are still serving the fundamental goals of the humans they represent.

This isn’t just a product roadmap. It’s a philosophy. Advertising at its best has always been about connection: between brand and audience, between publisher and advertiser. By enabling agentic collaboration, we have the opportunity to bring that connection into the AI era, not by automating away the conversation, but by amplifying it.

We’re still at the beginning of this journey, but beginnings matter. And if history has taught us anything, it’s that the structures we design now, meaning the protocols, the incentives, the norms, will shape the industry for decades to come. Our intention is to help shape them in a way that makes advertising more accountable, more collaborative, and, ultimately, more human.
